In virtually all applications I’ve worked on in the past years I heavily relied on unit tests to ensure that the application at hand was working as intended and to give confidence in making changes rapidly without breaking stuff.

Depending on the potential risks and frequency of deployments there were sometimes end-to-end tests and integration tests but the biggest part was always unit tests.

For (almost) every class in the application I’d write unit tests, because I wanted good test coverage. And to make sure that each class was tested in isolation, and that changes to its dependencies did not affect its unit tests, I’d create an interface for each dependency, inject them into the class and mock them away in the unit test, usually with a mocking framework like Moq or NSubstitute.

This was the way I and the teams I worked on did it for a long time. And things were good. Well, sort of.

Mockist vs Classical testing

First, let’s take a step back.

Apparently there is a long-running discussion going on in the TDD (Test Driven Development) world which is very relevant to what I’m about to discuss: mockist vs classical testing. I don’t want to talk too much about TDD in this post because… TDD, but basically, there are two camps:

The mockist camp states that any dependency of the SUT (System Under Test) should be mocked away, so that the SUT is tested in complete isolation. This would make it, among other things, easier to locate defects and would simultaneously improve the design of the code.

The classical camp states that you should use real objects as much as possible and only use mocks when it would be difficult or cumbersome to use a real object, for example an object that interacts with a rate limited web service.

I’m a bit embarrassed, because I’m super late to this party. For the biggest part of my career I’ve been mocking away happily, since this is what I was taught and this is what all the teams I worked on did. I was aware that there were developers out there who did not mock their dependencies, but I never gave them any attention or thought; after all, you can find crazy opinions on every topic in software development.

Only in the last few years, since I started practising DDD (Domain Driven Design), have I been mixing in classical testing: the business logic in the domain layer proved very suitable for tests without mocking because I kept the domain classes ‘pure’. Pure meaning: no dependency injection and no dependencies on infrastructure such as databases, file systems or other external services. Testing a single SUT this way also means testing the classes that the SUT uses to operate.

The tests for classes in other layers of the application, such as application services and infrastructure still heavily relied on mocks.

Isolated testing

Quite recently I’ve come to realize that isolated testing might not be very good; in fact, it could be harmful to our codebase in a lot of situations.

Let’s say we have a micro service. At the core is the domain where the business logic lives. It makes sense to properly test it using unit tests, covering all happy and unhappy flows as well as edge cases. Here, you probably won’t see or need a lot of mocking.
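As a minimal sketch of what such a mock-free domain test could look like, assume a simplified ShoppingCart that has no injected dependencies at all. The Quantity property and the rule that adding the same product twice increases the quantity are assumptions made purely for illustration, as are the xUnit assertions; none of them are prescribed by the rest of this post.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Xunit;

// Hypothetical, simplified domain types: no interfaces, no infrastructure.
public class Product
{
    public int Id { get; set; }
}

public class ShoppingCartItem
{
    public int ProductId { get; set; }
    public int Quantity { get; set; } = 1;
}

public class ShoppingCart
{
    private readonly List<ShoppingCartItem> _items = new();

    public IReadOnlyList<ShoppingCartItem> Items => _items;

    // Assumed business rule: adding the same product again raises the quantity.
    public void AddItem(Product product)
    {
        if (product is null) throw new ArgumentNullException(nameof(product));

        var existing = _items.FirstOrDefault(item => item.ProductId == product.Id);
        if (existing is null)
            _items.Add(new ShoppingCartItem { ProductId = product.Id });
        else
            existing.Quantity++;
    }
}

public class ShoppingCartTests
{
    [Fact]
    public void AddItem_SameProductTwice_IncreasesQuantity()
    {
        var cart = new ShoppingCart();
        var product = new Product { Id = 100 };

        cart.AddItem(product);
        cart.AddItem(product);

        // Real objects all the way down: nothing to set up, nothing to verify on a mock.
        var item = Assert.Single(cart.Items);
        Assert.Equal(2, item.Quantity);
    }

    [Fact]
    public void AddItem_NullProduct_Throws()
    {
        var cart = new ShoppingCart();

        Assert.Throws<ArgumentNullException>(() => cart.AddItem(null!));
    }
}
```

Because the cart only works with in-memory objects, the test exercises ShoppingCart and ShoppingCartItem together without a single test double.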

The problems start in other layers of the application, around the domain, for example layers that contain a lot of application services. Testing application services in isolation is a nightmare.

Usually, application services are responsible for orchestration: they expose application logic without implementing any business rules themselves. They merely gather all bits and pieces to execute use-cases. Therefore, an isolated test of an application service method will only test that the right mocks are called with the right arguments.

There is obviously some value in this: by testing it we look at the SUT again from a different angle, we are forced to think about how a certain method should react to different parameters and how it should behave when the consumer of the service invokes it in some unsupported way.

It also gives us some confidence in changing code, because if we have good tests we know that we did not call dependencies with invalid parameters, or forget to call them at all.

The theory is that we end up with tested building blocks that we can re-use and move around in our application when we’re refactoring.

Take the following application service for example:

    public class ShoppingCartService
    {
        private readonly IProductService _productService;
        private readonly IShoppingCartRepository _shoppingCartRepository;
        private readonly IUserService _userService;

        public ShoppingCartService(
            IProductService productService,
            IShoppingCartRepository shoppingCartRepository,
            IUserService userService)
        {
            _productService = productService;
            _shoppingCartRepository = shoppingCartRepository;
            _userService = userService;
        }

        public async Task AddProductAsync(int productId)
        {
            var product = await _productService.GetProductAsync(productId);
            var shoppingCart = await GetUserShoppingCartAsync();

            shoppingCart.AddItem(product);

            await _shoppingCartRepository.SaveAsync(shoppingCart);
        }

        public Task<ShoppingCart> GetShoppingCartAsync()
        {
            return GetUserShoppingCartAsync();
        }

        private async Task<ShoppingCart> GetUserShoppingCartAsync()
        {
            var user = await _userService.GetLoggedInUserAsync();

            return await _shoppingCartRepository.GetShoppingCartForUserAsync(user.Id);
        }
    }

The service has a method to add a product to the currently logged-in user’s shopping cart and persist it somewhere. Note that there is no business logic in this specific method; it only does the orchestration for our use case ‘Add Product To Shopping Cart’.

To test this method in isolation with Moq would mean we’d have to write a test that would look something like this:

    public class ShoppingCartServiceTests
    {
        [Fact]
        public async Task AddProductAsync_ExistingProduct_ShoppingCartWithAddedProductPersisted()
        {
            // Arrange
            var productId = 100;
            var shoppingCart = new ShoppingCart();

            var productServiceMock = new Mock<IProductService>(MockBehavior.Strict);
            productServiceMock
                .Setup(mock => mock.GetProductAsync(productId))
                .ReturnsAsync(new Product { Id = productId });

            var userServiceMock = new Mock<IUserService>(MockBehavior.Strict);
            userServiceMock
                .Setup(mock => mock.GetLoggedInUserAsync())
                .ReturnsAsync(new User { Id = 5 });

            var shoppingCartRepositoryMock = new Mock<IShoppingCartRepository>(MockBehavior.Strict);
            shoppingCartRepositoryMock
                .Setup(mock => mock.GetShoppingCartForUserAsync(It.IsAny<int>()))
                .ReturnsAsync(shoppingCart);
            shoppingCartRepositoryMock
                .Setup(mock => mock.SaveAsync(It.IsAny<ShoppingCart>()))
                .Returns(Task.CompletedTask);

            var sut = new ShoppingCartService(
                productServiceMock.Object,
                shoppingCartRepositoryMock.Object,
                userServiceMock.Object);

            // Act
            await sut.AddProductAsync(productId);

            // Assert
            shoppingCartRepositoryMock.Verify(mock => mock.SaveAsync(shoppingCart), Times.Once);
            shoppingCart.Items.Should().HaveCount(1);
            shoppingCart.Items.Should().ContainEquivalentOf(new ShoppingCartItem { ProductId = productId });
        }
    }

The test is long but quite simple: it verifies that when a product is added to the shopping cart, and the product exists, the shopping cart is persisted with the new product added to it.

The problem

As we discussed earlier, there is some value in this kind of test, but there are also some huge problems.

First of all, writing these tests costs time: not a little, but a lot. They’re hard to write and to read due to the large and cumbersome mock setups and verifications. The tests will inevitably become much larger than the code under test.

Furthermore, we lose all flexibility in our codebase. Refactoring suddenly becomes a tedious task because every change, either to the application service or to one of its dependencies, will break many tests. This means that after refactoring we have to spend a lot of time figuring out what our tests were supposed to do, whether they’re still relevant and how our mocks should be changed to make the tests green again. Our tests are brittle and fragile. And our code, on the other hand, becomes rigid and inflexible.

These tests effectively pour a layer of concrete over our application, making it a solid, hard to change block with all orchestration logic replicated in our mocks. Refactoring is harder than it was before our unit tests.

Lastly, unit tests like this give us no guarantee that the application actually works correctly. We only know for sure that our mocks are working, but if the mocks don’t match the implementations (anymore), we’re testing an illusion, only giving us a false sense of confidence.

Good tests should help us write solid (pun not intended) code that can be easily refactored, not the opposite.

How to solve this

In theory, the solution is simple: don’t test in isolation. In practice, it’s a bit more complicated.

One strategy I’ve successfully used to replace isolated (application service) tests is integration testing. Now, the name ‘integration test’ isn’t the best. Ask 10 developers what they think integration testing is and you get 10 different answers: there is no single definition.

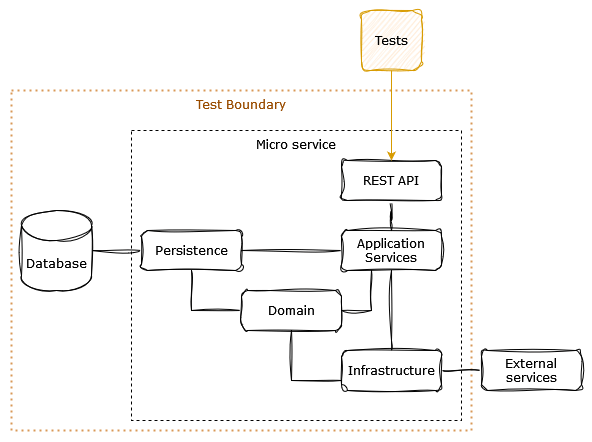

The tests we’re looking at here will test the behavior of the micro service by interacting with it from the outside: at the contract level. So we could call them something like Service Behavior Tests. (Please don’t be mad at me for inventing another term for integration tests.)

The idea is that from our test we interact with the application just like any consumer of your application would.

If you have a REST API, call the REST API from your tests. For example, if you’re building an ASP.NET Web API, you can use WebApplicationFactory for this. If your application listens to messages from a Message Broker like Azure Service Bus, send messages to it and see if it responds correctly.

And while doing this you want to mock as little as possible. Of course, you need to determine the boundaries of what you want to include in your test and create test doubles for the parts you want to exclude. But for the rest of the application use real implementations as much as possible.

Should the test call other micro services or external services? Probably not: you’d be creating a system test that could be quite fragile. If the other service is down, your tests fail.

Persistence in a database? Maybe! There are some great tools like Testcontainers that can help us spin up a clean database in our tests and tear it down after the tests complete. This will ensure that the whole application is tested all the way through.

Here is what a test using a REST API going all the way down through all parts of an application, including the database, would look like:

Inside the test boundary is our entire micro service and the database. The database could be started before our tests commence, using Testcontainers. The external services are not part of the scope of our test. These services need to be replaced by a test double, so it could be a mock. In a lot of cases I’ve found it easier to build a small fake, but that really depends on the responsibilities of the external service. In some cases mocks will be more efficient.
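To make the idea of a small fake concrete, here is a minimal sketch. It assumes, purely for illustration, that IProductService from the earlier example is the piece that talks to an external service; the interface and Product type are redeclared in simplified form so the snippet stands on its own, and FakeProductService and its Add method are made-up names.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;

public class Product
{
    public int Id { get; set; }
}

public interface IProductService
{
    Task<Product> GetProductAsync(int productId);
}

// A hand-written fake: a working, in-memory stand-in for the real service.
// Unlike a mock, it has real (if simplistic) behavior and needs no per-test setup calls.
public class FakeProductService : IProductService
{
    private readonly Dictionary<int, Product> _products = new();

    // Test helper (hypothetical): lets a test decide which products "exist".
    public void Add(Product product) => _products[product.Id] = product;

    public Task<Product> GetProductAsync(int productId)
    {
        if (_products.TryGetValue(productId, out var product))
            return Task.FromResult(product);

        // Assumed behavior for unknown products; the real service may differ.
        return Task.FromException<Product>(
            new KeyNotFoundException($"Product {productId} does not exist."));
    }
}
```

A test would fill the fake with the products its scenario needs and register it in place of the real implementation. The same fake can then be shared by every test, which is where it tends to pay off compared to repeating mock setups.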

Confused about the difference between a fake and a mock? Martin Fowler explains it much better than I can.

The result is that we’re able to run the tests locally as well as in the CI/CD (continuous integration / continuous delivery) pipeline, just like unit tests. We don’t want to spin up a real environment to run our tests.

Example

Let’s take the example from earlier and see how we can write a “Service Behavior Test” for it.

First, create a small ASP.NET Web API to access the application service:

    var builder = WebApplication.CreateBuilder(args);

    // Add services to the container.
    builder.Services.AddTransient<ShoppingCartService>();
    builder.Services.AddTransient<IProductService, ProductService>();
    builder.Services.AddTransient<IUserService, UserService>();
    builder.Services.AddTransient<IShoppingCartRepository, ShoppingCartRepository>();

    var app = builder.Build();

    // Map endpoints.
    app.MapGet("/cart", async (ShoppingCartService shoppingCartService) =>
        await shoppingCartService.GetShoppingCartAsync());

    app.MapPost("/cart/add", async (ShoppingCartService shoppingCartService, [FromBody] AddProductRequest request) =>
        await shoppingCartService.AddProductAsync(request.ProductId));

    app.Run();

The web API does nothing more than register a couple of services in the Dependency Injection container and map two endpoints to the methods in the ShoppingCartService.

Since this post is not about the technical details of setting up WebApplicationFactory and Testcontainers, I’m going to skip that part. If you want to know more, this video from Nick Chapsas is a great starting point. Assume we have a database running in a Docker container which has been seeded with some product data, and that the WebApplicationFactory has been set up to use the containerized database. We also made sure that there is a user available for retrieving the current shopping cart.
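For orientation only, the fixture used in the test below could be sketched roughly like this. Everything here rests on assumptions that this post does not state: PostgreSQL as the database (through the Testcontainers.PostgreSql package), xUnit v2 and its IAsyncLifetime interface, a Program class that is visible to the test project, and a hypothetical DatabaseOptions type through which the application receives its connection string. Seeding the product data is left out.

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.Mvc.Testing;
using Microsoft.AspNetCore.TestHost;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.DependencyInjection.Extensions;
using Testcontainers.PostgreSql;
using Xunit;

// Hypothetical: how the application is assumed to receive its connection string.
public record DatabaseOptions(string ConnectionString);

public class WebShopApiFactory : WebApplicationFactory<Program>, IAsyncLifetime
{
    // Assumed database engine and image tag; swap in whatever the service uses.
    private readonly PostgreSqlContainer _database = new PostgreSqlBuilder()
        .WithImage("postgres:16-alpine")
        .Build();

    // xUnit calls this once before the first test that uses the fixture.
    public Task InitializeAsync() => _database.StartAsync();

    protected override void ConfigureWebHost(IWebHostBuilder builder)
    {
        builder.ConfigureTestServices(services =>
        {
            // Point the application at the containerized database.
            services.RemoveAll<DatabaseOptions>();
            services.AddSingleton(new DatabaseOptions(_database.GetConnectionString()));

            // Test doubles for external services would be registered here as well.
        });
    }

    // Explicit implementation avoids clashing with the base class's own DisposeAsync.
    Task IAsyncLifetime.DisposeAsync() => _database.DisposeAsync().AsTask();
}
```

The host is only built when the first client is created, so by the time the connection string is read the container has already been started.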

We can then write a test that calls the API and verifies that the shopping cart is persisted with the new product added to it, only using the API endpoints:

    public class AddProductShoppingCartTests : IClassFixture<WebShopApiFactory>
    {
        private readonly WebShopApiFactory _apiFactory;

        public AddProductShoppingCartTests(WebShopApiFactory apiFactory)
        {
            _apiFactory = apiFactory;
        }

        [Fact]
        public async Task AddProductToShoppingCart_ValidProduct_ShoppingCartWithProductPersisted()
        {
            // Arrange
            var client = _apiFactory.CreateClient();
            var request = new AddProductRequest(ProductId: 1);

            // Act
            await client.PostAsJsonAsync("cart/add", request);

            // Assert
            var shoppingCart = await client.GetFromJsonAsync<ShoppingCart>("cart");
            shoppingCart!.Items.Should().HaveCount(1);
            shoppingCart.Items.Should().ContainEquivalentOf(new ShoppingCartItem { ProductId = request.ProductId });
        }
    }

As you can see the test itself is very simple. We create a client for the API, send a request to add a product to the shopping cart and then verify that the shopping cart has been persisted with the product added to it. It goes through the whole application, from the API to the database.

Why it’s great

By testing like this we gain a couple of important things:

- Bootstrapping is covered, for example setting up the web application host, dependency injection frameworks and configuration system.

- Integration between classes and various layers in the application is covered. Isolated tests cannot do this.

- Unintentional changes to our APIs and contracts are covered.

- Tests are easier to write and read because they’re describing how our application (should) behave. No more piles of mock setups to decipher.

- The application can be refactored without changing tests. If the behavior is the same, the tests must still work.

In the end we can be much more confident that our application is actually working as expected.

Downsides

Of course there are downsides as well.

Setting up the frameworks for integration tests is not trivial. This will take some time, even for experienced developers. But once that’s done, writing the tests themselves should be much easier than writing isolated tests with mocks.

The tests will also be slower than unit tests, depending on the setup. Starting up the whole application and maybe some Docker test containers takes time.

You won’t run these in milliseconds; more likely it takes tens of seconds or even minutes. For me it’s fine if it takes 30 seconds to run all tests. I’ll exclude them from my live unit testing and run them manually when I’m done with my changes. They are also still fast enough to run in our pull request build validation and build pipeline.
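One possible way to keep the slower tests out of the fast feedback loop, assuming xUnit as in the examples above, is to tag them with a trait and filter on it when running the tests. The category name ServiceBehavior and the test class are made up for this sketch.

```csharp
using Xunit;

// "ServiceBehavior" is a hypothetical category name; any label works as long
// as the filter uses the same one:
//
//   dotnet test --filter "Category!=ServiceBehavior"   (skip the slow tests)
//   dotnet test --filter "Category=ServiceBehavior"    (run only the slow tests)
[Trait("Category", "ServiceBehavior")]
public class SlowServiceBehaviorTests
{
    [Fact]
    public void RunsOnlyWhenTheCategoryIsIncluded()
    {
        // Placeholder for a test that starts the application and a database.
        Assert.True(true);
    }
}
```

The first command suits quick local runs, while the second could be used in the pull request build where a longer run is acceptable.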

Locating problems can also be a bit harder. In isolated unit tests you can usually pinpoint the failing method quickly. But for non-isolated tests, you’ll have to dive into the error to see what went wrong, sometimes having to debug the test and step through the application itself. It might seem like a bit of a hassle, but I’ve found that it’s remarkably easy and fast in practice.

Can we use this in every situation?

Probably not. In software development there is never a silver bullet that works for every conceivable situation. But if you develop micro services or even a modular monolith that mainly operates based on external input, there’s a good chance this could be a good strategy for you.

No more unit tests?

So what does that mean for unit tests, are they out?

Well, no. Unit tests are still a powerful and indispensable tool. In many cases it’s still very relevant to test our domain layer and other parts of the application properly. With unit tests it’s easier to make fine-grained tests that run fast, test a lot of different scenarios and are easy to understand.

Because unit tests are much faster than integration tests you can run them much more frequently: either manually or using some form of live unit testing.

However, when you start seeing a lot of mocks in unit tests it might be a sign that something is wrong and it could be a good idea to take a step back and look at alternative ways of testing those parts of the application.

Final notes

A big catalyst for my shift in perspective was a session at NDC Oslo 2022 by Martin Thwaites, called Building Operable Software with TDD (but not the way you think). Definitely check it out; it’s well worth your time.